Modern LLMs don’t just answer questions; they absorb patterns from the web. This article explains how prompt learning AI works at a content level, how your pages act as indirect prompts through training and reinforcement signals, and what makes content “LLM-beneficial.” You’ll learn when and how content is used, see real examples of prompt learning in action, and get a clear framework for creating training-friendly pages that improve long-term AI visibility.

Prompt Learning Through Content

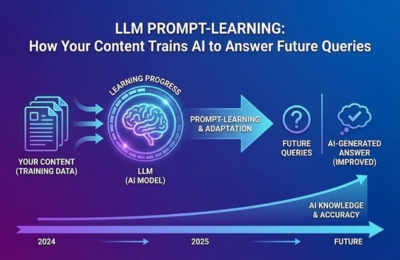

Prompt learning is no longer limited to what a user types into an AI chat box. At scale, LLMs learn from the structure, clarity and consistency of published content across the web. Over time, high-quality pages influence how models summarize topics, define concepts and choose which brands or sources to reference.

This is where prompt learning AI intersects with content strategy. Instead of training models directly, brands influence AI outputs through indirect fine-tuning by publishing content that repeatedly teaches models how a topic should be explained.

This shift is critical for anyone focused on long-term visibility in systems like Google AI Overviews, ChatGPT, Gemini, Claude and Perplexity, where answers are generated, not ranked.

How AI Uses Existing Web Content as Training Prompts

Large language models are trained on a massive corpus of licensed data, human-created examples and publicly available web content. While models don’t “remember” individual pages, they learn patterns in how concepts are framed, which explanations are consistent and which sources appear authoritative.

From a content perspective, this means:

- Your pages act as implicit prompts

- Repeated structures become learned response templates

- Clear definitions influence default AI phrasing

For example, if dozens of high-quality pages consistently explain a concept using a specific definition-first format, LLMs begin to mirror that structure when answering future questions.

This is why concepts like indirect fine-tuning and LLM reinforcement matter. You are not training a private model, but you are contributing signals that shape how public models respond over time.

This mechanism directly supports AIO, AEO & GEO strategies, where the goal is to become a reliable answer source, not just a ranked URL.

Conditions for Content to Be Used in Training

Not all content contributes equally. For a page to function as LLM-beneficial content, it must meet specific quality and structural conditions.

Key conditions include:

Clarity over creativity

- AI favors unambiguous explanations, not metaphor-heavy or vague copy.

Consistent topical framing

- Pages that stay tightly focused on one concept send stronger content training signals.

Structured information hierarchy

- Clear H1 through H3 usage, definitions, lists and examples help models parse meaning.

Factual alignment across sections

- Contradictions weaken reinforcement and increase hallucination risk.

Public accessibility and crawlability

- Content must be indexable and readable by search and AI systems.
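
Two of the conditions above, heading hierarchy and crawlability, can be checked mechanically. The following is a minimal sketch in Python, not a full audit tool. It assumes you already have the page's HTML and the site's robots.txt as strings. The function names are hypothetical, the heading check uses a simple regular expression rather than a full HTML parser, and `GPTBot` appears only as an example crawler user agent.

```python
import re
from urllib.robotparser import RobotFileParser


def heading_levels(html: str) -> list[int]:
    """Return the levels of all <h1>..<h6> opening tags in document order."""
    return [int(level) for level in re.findall(r"<h([1-6])\b", html, flags=re.IGNORECASE)]


def skipped_levels(levels: list[int]) -> list[tuple[int, int]]:
    """Return (previous, current) pairs where a heading jumps down more than one level."""
    return [(prev, cur) for prev, cur in zip(levels, levels[1:]) if cur - prev > 1]


def is_crawlable(robots_txt: str, user_agent: str, url: str) -> bool:
    """Check whether the given robots.txt rules allow user_agent to fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    # Hypothetical page and robots.txt, used only for illustration.
    page = "<h1>Prompt Learning</h1><h3>Examples</h3><h2>Conditions</h2>"
    robots = "User-agent: GPTBot\nDisallow: /private/\n\nUser-agent: *\nAllow: /\n"

    print(skipped_levels(heading_levels(page)))
    print(is_crawlable(robots, "GPTBot", "https://example.com/blog/post"))
    print(is_crawlable(robots, "GPTBot", "https://example.com/private/page"))
```

A non-empty result from `skipped_levels` points to a place where the hierarchy jumps, for example from H1 straight to H3. A `False` from `is_crawlable` means the page is blocked for that user agent, so it cannot contribute any signal through that crawler.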

According to guidance referenced in OpenAI documentation, models are trained to generalize from patterns rather than memorize sources. This makes consistency and repetition across the ecosystem more influential than any single page.

Examples of Prompt Learning

Prompt learning through content shows up in subtle but powerful ways. Here are common real-world examples:

Example 1: Definition Standardization

If most authoritative pages define a term in the first 40–60 words, AI answers often open with a concise definition mirroring that learned pattern.
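
To see whether your own pages follow this definition-first pattern, a rough check like the one below can help. It is a sketch under stated assumptions: the 60-word window comes from the range mentioned above, while the function name and the short list of defining phrases are hypothetical and far from exhaustive.

```python
# Illustrative phrases that often signal a definition; not an exhaustive list.
DEFINING_PHRASES = (" is ", " are ", " refers to ", " means ")


def defines_term_early(text: str, term: str, max_words: int = 60) -> bool:
    """Return True if `term` is followed by a defining phrase within the first max_words words."""
    # Normalize whitespace and pad with spaces so phrase matching works at the edges.
    opening = " " + " ".join(text.split()[:max_words]).lower() + " "
    term_pos = opening.find(term.lower())
    if term_pos == -1:
        return False
    after_term = opening[term_pos + len(term):]
    return any(phrase in after_term for phrase in DEFINING_PHRASES)


if __name__ == "__main__":
    intro = "Prompt learning is the way models pick up patterns from published content."
    print(defines_term_early(intro, "prompt learning"))
```

The check is deliberately crude. It only confirms that the term and a defining phrase appear early, not that the definition is accurate or well written.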

Example 2: Step-Based Explanations

Topics frequently explained using step-by-step formats lead LLMs to default to numbered lists in responses.

Example 3: Brand-Concept Pairing

When a brand is consistently associated with a specific solution or framework, AI begins to reference that brand contextually, even without a direct citation.

Example 4: Question-Answer Reinforcement

Pages that clearly answer common questions help models learn which phrasing resolves which intent, improving answer accuracy in future queries.

These are not coincidences. They are outcomes of content training signals accumulated across thousands of similar pages.

How to Create Training-Friendly Content

Creating content that benefits LLM learning does not require manipulation; it requires discipline.

Best practices for training-friendly pages:

- Start with a clear, literal explanation before adding nuance

- Use consistent terminology throughout the page

- Align headings, body copy and examples around one core intent

- Avoid unnecessary contradictions or speculative statements

- Reinforce key points using summaries, bullets, or FAQs
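
Consistent terminology is one of the easier practices to verify automatically. The sketch below counts how many spellings of a multi-word term appear on a page, such as "prompt learning" versus "prompt-learning". The function name is hypothetical, and the approach only catches spacing and hyphenation variants, not synonyms.

```python
import re
from collections import Counter


def term_variants(text: str, words: list[str]) -> Counter:
    """Count each spelling of a multi-word term, allowing a space, a hyphen, or no separator."""
    pattern = r"\b" + r"[\s-]?".join(re.escape(word) for word in words) + r"\b"
    matches = re.findall(pattern, text, flags=re.IGNORECASE)
    # Lowercase and collapse whitespace so only the separator distinguishes variants.
    return Counter(re.sub(r"\s+", " ", match.lower()) for match in matches)


if __name__ == "__main__":
    page_text = (
        "Prompt learning shapes AI answers. Prompt-learning depends on clarity. "
        "Good pages support prompt learning."
    )
    variants = term_variants(page_text, ["prompt", "learning"])
    print(variants)
    if len(variants) > 1:
        print("Inconsistent spelling found; consider standardizing on one form.")
```

If the counter holds more than one key, the page mixes spellings of the same term, and picking one form throughout is the simplest fix.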

From an AIO and AEO perspective, the goal is to teach the model how to answer, not just to attract clicks.

When content is written this way, it supports:

- Better inclusion in AI summaries

- Reduced risk of misrepresentation

- Stronger long-term visibility in generative systems

This approach aligns naturally with GEO strategies, where presence inside generated answers matters more than traditional rankings.

FAQs: Prompt Learning and AI Content Training

Does AI learn from my content?

AI does not memorize individual pages, but it learns patterns from high-quality, publicly available content during training and reinforcement cycles.

How do I create training-friendly pages?

Focus on clarity, structured explanations, consistent terminology and direct answers aligned to user intent.

Is this the same as fine-tuning a model?

No. This is indirect fine-tuning, where content influences learned patterns without direct model access.

Does this help with AI search visibility?

Yes. Training-friendly content improves how AI systems summarize, reference and explain topics, which is key to AIO and AEO success.