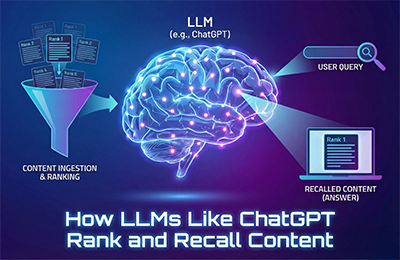

Large Language Models (LLMs) like ChatGPT don’t “rank” content the way Google does. Instead, they predict the most useful answer based on patterns learned during training, fact structure, entity clarity, semantic relevance, social proof and how consistently an idea appears across the web.

This blog explains how LLMs rank content, what improves LLM recall signals, why generative ranking factors matter for brands and what this means for AIO (Artificial Intelligence Optimization).

How LLMs Rank Content

LLMs don’t crawl and index the web like a search engine. They learn from massive training datasets, then generate answers based on what they’ve learned plus any new, real-time retrieval tools they have access to.

When a user asks a question, the model predicts the most reliable answer from patterns, entities and relationships stored in its internal representation of the world.

This is why AIO (Artificial Intelligence Optimization) is becoming essential.

Brands now need to structure information so LLMs can easily understand, recall and reuse it in generated answers.

Below is the simplest breakdown of the factors that influence how LLMs recall and rank content.

Training Datasets

LLMs learn from a wide mix of sources: public pages, licensed datasets, books, academic papers, code repositories and more.

These datasets shape what the model knows and how it prioritizes information.

What matters for ranking inside ChatGPT and similar models?

- High-quality sources appear more often in training, so they become more “trusted.”

- Clear, factual, structured writing increases learnability.

- Content cited across many places becomes more prominent in the model’s memory.

This is why repetition across trusted sources helps LLM recall.

For deeper technical notes, OpenAI provides training insights in its research papers.

Entity Detection

Entities are the backbone of how LLMs interpret content: brands, people, products, industries, services, locations, publications and events.

If an LLM clearly understands:

- What your brand is,

- What you do,

- Where you fit in the category,

it can more reliably include you in generated answers.

Why entities matter for LLM ranking:

- They help the model form stable “knowledge nodes.”

- They improve recall because the model connects your entity with relevant topics.

- They reduce ambiguity, one of the biggest blockers to LLM visibility.

Example:

If your site consistently presents your brand as “a GEO specialist helping enterprises optimize for generative engines,” the model stores that association, improving recall for generative search topics.

Semantic Relevance

Semantic relevance means how closely your content matches the intent of the question.

LLMs weigh:

- The clarity of your explanations

- The simplicity of your definitions

- The presence of supporting examples

- Whether your content answers the kind of questions people typically ask

In simple terms:

LLMs prefer content that is easy to reuse and easy to adapt into natural-sounding answers.

This is where generative ranking factors come in.

Models give more weight to content that fits the “shape” of a good generated answer.

That means:

- short definitions

- bullet-point frameworks

- clear steps

- consistently phrased explanations

The simpler and more structured your content, the higher the recall probability.
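To make the idea of matching content to the intent of a question concrete, here is a minimal sketch in Python. It is a toy stand-in, not how ChatGPT or any production model scores content: real systems typically compare learned embeddings, while this example only compares word counts with cosine similarity. The question, the passage names and the passage texts are all hypothetical.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into alphanumeric word tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine_similarity(a: str, b: str) -> float:
    """Cosine similarity between the word-count vectors of two texts."""
    va, vb = Counter(tokenize(a)), Counter(tokenize(b))
    # Only words that occur in both texts contribute to the dot product.
    dot = sum(va[w] * vb[w] for w in va.keys() & vb.keys())
    norm_a = math.sqrt(sum(c * c for c in va.values()))
    norm_b = math.sqrt(sum(c * c for c in vb.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


# Hypothetical question and candidate passages, for illustration only.
question = "What is generative engine optimization?"
passages = {
    "short_definition": (
        "Generative engine optimization is the practice of structuring "
        "content so generative engines can reuse it."
    ),
    "vague_intro": (
        "Our award-winning team delivers synergy for forward-thinking "
        "enterprises worldwide."
    ),
}

scores = {name: cosine_similarity(question, text) for name, text in passages.items()}
best = max(scores, key=scores.get)
print(best, scores)
```

In this sketch the short, direct definition scores higher than the vague introduction because it shares the question's key terms. That is the same intuition behind writing content in the shape of the answer people are looking for.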

Social Signals

While LLMs don’t directly track likes or shares, social signals influence:

- What gets talked about online

- What appears in training datasets

- What becomes a widely accepted fact

If an idea spreads widely online through blogs, conversations, comments and podcasts, it gains “weight” inside the model because:

- It appears more often in training data

- It becomes part of the model’s understanding of “consensus knowledge.”

This is why brands with active content ecosystems tend to see stronger LLM recall over time.

Freshness

Generative models aim to give up-to-date answers, but they have limits based on training cycles.

However, freshness still influences ranking:

- If your content appears frequently in new sources, it strengthens the model’s probability of recalling it.

- If real-time tools retrieve your webpage, freshness becomes a ranking factor during retrieval.

In simple terms:

Consistent publishing helps ChatGPT remember you.

Examples of freshness signals:

- Updated definitions

- New case studies

- Revised statistics

- Recent industry examples

LLMs can’t follow trends the way humans do, but they can recognize patterns in updated information.
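As a rough illustration of freshness acting as a ranking factor during retrieval, the sketch below re-ranks candidate pages by multiplying a relevance score by an exponential freshness decay. This is an assumption for illustration, not a documented formula from any AI product: the `half_life_days` parameter, the `relevance` values and the page names are all hypothetical.

```python
from datetime import date, timedelta


def freshness_weight(published: date, today: date, half_life_days: float = 180.0) -> float:
    """Exponential decay: the weight halves every `half_life_days` (assumed value)."""
    age_days = max((today - published).days, 0)
    return 0.5 ** (age_days / half_life_days)


def rerank(candidates: list[dict], today: date, half_life_days: float = 180.0) -> list[dict]:
    """Sort candidates by relevance multiplied by freshness, highest first."""
    return sorted(
        candidates,
        key=lambda c: c["relevance"] * freshness_weight(c["published"], today, half_life_days),
        reverse=True,
    )


# Hypothetical pages: the older page is slightly more relevant,
# but the recently updated page wins once freshness is applied.
today = date(2025, 1, 1)
candidates = [
    {"url": "old-guide", "relevance": 0.9, "published": today - timedelta(days=1000)},
    {"url": "updated-guide", "relevance": 0.8, "published": today - timedelta(days=30)},
]
ranked = rerank(candidates, today)
print([c["url"] for c in ranked])
```

A shorter half-life makes the ranking favor recent pages more aggressively, and a longer one lets older but highly relevant pages keep their position.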

Consistency Across the Web

This is one of the strongest LLM recall signals.

If your brand describes itself differently on every platform, LLMs struggle to form a stable mental representation of you.

But if your:

- website

- bios

- social pages

- press releases

- directory listings

- About Us statements

all use the same terminology and narrative, the model forms a clear, consistent entity.

Consistency improves:

- recall

- trust

- ranking probability

- answer inclusion

This is the foundation of AIO (Artificial Intelligence Optimization).
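One simple way to audit this kind of consistency yourself is to compare how your brand is described on each platform. The sketch below is a rough, hypothetical check using Jaccard similarity over word sets. It says nothing about how an LLM actually builds its representation of a brand; it only flags descriptions that have drifted apart. The platform names and the directory text are made up for illustration.

```python
import re
from itertools import combinations


def token_set(text: str) -> set[str]:
    """Lowercase the text and return its set of alphanumeric word tokens."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def jaccard(a: str, b: str) -> float:
    """Share of distinct words the two texts have in common (0.0 to 1.0)."""
    sa, sb = token_set(a), token_set(b)
    if not sa and not sb:
        return 1.0
    return len(sa & sb) / len(sa | sb)


def consistency_report(descriptions: dict[str, str]) -> list[tuple[str, str, float]]:
    """Return (platform_a, platform_b, similarity) per pair, least similar first."""
    pairs = [
        (p1, p2, jaccard(descriptions[p1], descriptions[p2]))
        for p1, p2 in combinations(sorted(descriptions), 2)
    ]
    return sorted(pairs, key=lambda row: row[2])


# Hypothetical brand descriptions collected from three platforms.
descriptions = {
    "website": "A GEO specialist helping enterprises optimize for generative engines",
    "social_bio": "A GEO specialist helping enterprises optimize for generative engines",
    "directory": "Full-service digital marketing agency for small businesses",
}

report = consistency_report(descriptions)
for platform_a, platform_b, similarity in report:
    print(f"{platform_a} vs {platform_b}: {similarity:.2f}")
```

Pairs at the top of the report are the ones to rewrite first. Here the directory listing tells a different story from the website and the social bio.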

Implications for AIO

AIO focuses on making your brand visible, understandable and reusable inside AI systems.

How LLMs rank content feeds directly into AIO strategy.

AIO requires brands to optimize:

✔ Entities

Clear, consistent brand definitions.

✔ Structured Content

Clear, simple, reusable explanations.

✔ Multi-platform Consistency

Same story across web properties.

✔ Authority Signals

Citations, mentions, expert references.

✔ Fresh Content

Updated insights strengthen model memory.

✔ High-quality Sources

Features, PR, and thought leadership contribute to training datasets.

As LLMs become a primary discovery channel, AIO ensures your content is “recall-ready” for generative answers, not just search rankings.

FAQs

1. How does ChatGPT pick sources?

ChatGPT relies on patterns learned from large training datasets and real-time retrieval tools. It prioritizes clear, consistent, factual content from high-quality sources.

2. What affects LLM rankings?

Entity clarity, semantic relevance, content structure, consistency across the web, freshness and how often your ideas appear in reliable sources.

3. Do LLMs use backlinks the same way Google does?

No, LLMs don’t rank pages based on backlinks. However, widely cited content appears more often in training data, which indirectly improves recall and credibility.

4. How can brands improve their chances of being mentioned in AI-generated answers?

By publishing clear, consistent, fact-driven content across multiple platforms, so the model can easily understand, trust and reuse the brand’s information.