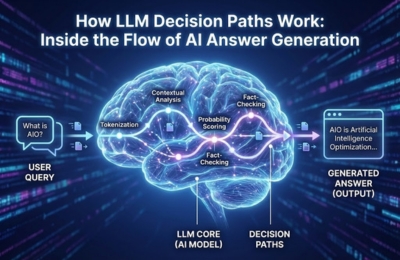

Large Language Models don’t “think” like humans, but they do follow structured internal pathways to generate answers. These pathways, often described as LLM decision trees, determine how AI selects reasoning routes, weighs prior knowledge, evaluates source authority and produces final outputs. Understanding this behind-the-scenes flow helps explain why some brands are cited accurately while others are misunderstood, and how content creators can influence AI-generated answers by optimizing for inference behavior.

LLM Decision Paths

When a large language model produces an answer, it isn’t picking words at random or improvising. Every response is the result of a structured decision flow shaped by training data, probability weighting, contextual signals and learned reasoning patterns.

Understanding LLM decision paths gives marketers, SEOs and content strategists a rare look at how AI systems assemble answers token by token into what reads as a confident, fluent response.

At the core of this process sits the LLM decision tree: a conceptual framework that explains how models navigate multiple possible answer routes and select the most statistically and contextually appropriate one.

This matters because AI-driven search, answer engines and AI overviews don’t simply retrieve information; they generate answers. And generation depends heavily on how decision paths are formed and reinforced.

How LLMs Choose Reasoning Routes

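LLMs generate answers by predicting a probability distribution over the next token given the context so far, then choosing a token from that distribution, one step at a time. Repeated across an entire answer, this process behaves less like a single straight line and more like a branching structure.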
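To make the prediction step concrete, here is a minimal Python sketch. The logits (raw scores) are invented for illustration, and the `softmax` helper stands in for the final step a real model performs over its full vocabulary; it is not taken from any particular model or library.

```python
import math

def softmax(logits):
    """Convert raw scores (logits) into a probability distribution."""
    m = max(logits.values())  # subtract the max for numerical stability
    exps = {tok: math.exp(score - m) for tok, score in logits.items()}
    total = sum(exps.values())
    return {tok: e / total for tok, e in exps.items()}

# Hypothetical logits for the token after "The capital of France is"
logits = {"Paris": 6.0, "Lyon": 2.0, "a": 1.5, "located": 1.0}

probs = softmax(logits)
next_token = max(probs, key=probs.get)  # greedy choice: the single most likely token
print(next_token)  # Paris
for tok, p in sorted(probs.items(), key=lambda kv: -kv[1]):
    print(f"{tok:>8}: {p:.3f}")
```

A real model repeats this step for every token, appending each choice to the context before predicting the next one.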

Each prompt opens up multiple potential reasoning routes. At every step, the model assigns probability weights to the possible continuations based on:

- Contextual relevance of prior tokens

- Learned associations from training data

- Confidence signals derived from authoritative patterns

- Internal alignment with known concepts

The route with the highest overall probability, accumulated across every step, usually becomes the final answer path.
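The idea of scoring several routes at once most closely resembles beam search, one real decoding strategy; many chat products sample tokens instead, so treat this as an illustration rather than a description of any specific system. The `TOY_MODEL` table and its probabilities are hypothetical. A route’s overall score is the sum of the log-probabilities of its tokens, which is equivalent to multiplying their probabilities.

```python
import math

# Hypothetical toy "model": maps a context (tuple of tokens) to next-token probabilities.
TOY_MODEL = {
    (): {"AI": 0.6, "LLMs": 0.4},
    ("AI",): {"predicts": 0.55, "retrieves": 0.45},
    ("LLMs",): {"predict": 0.9, "retrieve": 0.1},
    ("AI", "predicts"): {"<end>": 1.0},
    ("AI", "retrieves"): {"<end>": 1.0},
    ("LLMs", "predict"): {"<end>": 1.0},
    ("LLMs", "retrieve"): {"<end>": 1.0},
}

def beam_search(model, beam_width=2, max_len=3):
    """Keep the `beam_width` highest-scoring partial routes at each step."""
    beams = [((), 0.0)]  # (tokens so far, cumulative log-probability)
    for _ in range(max_len):
        candidates = []
        for tokens, score in beams:
            if tokens and tokens[-1] == "<end>":
                candidates.append((tokens, score))  # finished route: carry it over unchanged
                continue
            for tok, p in model[tokens].items():
                candidates.append((tokens + (tok,), score + math.log(p)))
        beams = sorted(candidates, key=lambda c: c[1], reverse=True)[:beam_width]
    return beams

for width in (1, 2):
    tokens, score = beam_search(TOY_MODEL, beam_width=width)[0]
    print(f"beam_width={width}: {' '.join(tokens[:-1])} (p={math.exp(score):.2f})")
```

With `beam_width=1`, which reduces to greedy decoding, the search commits to "AI" because it is the likeliest first token and ends with p ≈ 0.33. With `beam_width=2` it keeps "LLMs" alive and finds "LLMs predict" at p ≈ 0.36, a route with a higher overall probability that greedy decoding misses.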

This is where AI inference plays a critical role. Inference is not retrieval; it’s synthesis. The model infers what should come next, not what exists verbatim in a database.

As a result, two prompts that look similar to humans can trigger entirely different reasoning routes inside the model.

Concept of Decision Trees in AI

In classical AI, decision trees are explicit structures with defined branches and outcomes. LLMs contain no such explicit tree, but the branching logic is still a useful way to describe how they behave.

Think of an LLM decision tree as a probabilistic map:

- Each node represents a possible semantic direction

- Each branch represents a reasoning continuation

- Each leaf represents a finalized answer state
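Because the tree inside an LLM is implicit, an explicit data structure can only ever be an analogy. The sketch below, with a hypothetical `Node` class and invented probabilities, treats each leaf’s likelihood as the product of the branch probabilities on the path leading to it.

```python
from dataclasses import dataclass, field

@dataclass
class Node:
    """One semantic direction in a toy answer tree."""
    label: str
    children: list = field(default_factory=list)  # list of (probability, Node) branches

def leaves_with_probs(node, prob=1.0, path=()):
    """Yield every leaf (finalized answer state) with the product of branch probabilities on its path."""
    path = path + (node.label,)
    if not node.children:
        yield path, prob
        return
    for p, child in node.children:
        yield from leaves_with_probs(child, prob * p, path)

# Hypothetical tree for the prompt "What is AIO?"
tree = Node("What is AIO?", [
    (0.7, Node("optimization for AI answers", [
        (0.8, Node("defines it for answer engines")),
        (0.2, Node("confuses it with SEO")),
    ])),
    (0.3, Node("ambiguous acronym", [
        (1.0, Node("hedges and lists meanings")),
    ])),
])

for path, p in sorted(leaves_with_probs(tree), key=lambda x: -x[1]):
    print(f"{p:.2f}  {' -> '.join(path)}")
```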

Unlike a traditional decision tree, which is fixed once it has been built, an LLM’s tree is dynamic. It adapts based on:

- Prompt phrasing

- Prior conversational context

- Topic familiarity

- Depth of learned associations

This helps explain why AI sometimes answers confidently and other times hedges, generalizes, or refuses. The shape of the tree reflects whether a high-confidence path exists or whether uncertainty dominates the branching process.
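One common way to quantify how narrow or wide the branching is at a given step is the Shannon entropy of the next-token distribution. The sketch below is a diagnostic lens, not a mechanism: models are not known to consult an explicit entropy threshold before hedging, and `HEDGE_THRESHOLD` is a hypothetical value chosen only to illustrate the contrast.

```python
import math

def entropy_bits(probs):
    """Shannon entropy in bits: low means one dominant branch, high means probability is spread out."""
    return -sum(p * math.log2(p) for p in probs if p > 0)

confident = [0.9, 0.05, 0.05]          # hypothetical: one clear continuation
uncertain = [0.25, 0.25, 0.25, 0.25]   # hypothetical: no branch stands out

HEDGE_THRESHOLD = 1.5  # hypothetical cutoff, for illustration only

for name, dist in [("confident", confident), ("uncertain", uncertain)]:
    h = entropy_bits(dist)
    mode = "answer directly" if h < HEDGE_THRESHOLD else "hedge"
    print(f"{name}: {h:.2f} bits -> {mode}")
```

A uniform spread over four options gives exactly 2 bits, while the peaked distribution sits well under 1 bit.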

Influence of Prior Knowledge

One of the most overlooked elements in LLM reasoning is prior knowledge density.

LLMs are trained on massive datasets, but not every topic is represented equally. Topics with:

- Repeated, consistent explanations

- Stable terminology

- High agreement across sources

create stronger internal pathways.

When a prompt aligns with these well-trained patterns, the decision tree becomes narrow and confident. When information is sparse, contradictory, or fragmented, the tree widens, leading to cautious or diluted answers.

This is why brands with inconsistent messaging often experience AI misrepresentation. The model isn’t confused; it’s navigating a fragmented decision tree built from conflicting inputs.
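A crude way to see how consistency shapes a distribution is to estimate next-word probabilities by simple counting. Real LLMs learn smoothed distributions with neural networks rather than lookup tables, and the brand "Acme" and its descriptions are hypothetical, so this only illustrates the direction of the effect.

```python
from collections import Counter

def next_word_distribution(corpus, prefix):
    """Estimate P(next word | prefix) by counting what follows `prefix` in each sentence."""
    counts = Counter()
    n = len(prefix)
    for sentence in corpus:
        words = sentence.split()
        for i in range(len(words) - n):
            if tuple(words[i:i + n]) == prefix:
                counts[words[i + n]] += 1
    total = sum(counts.values())
    if total == 0:
        return {}  # prefix never seen: no estimate possible
    return {w: c / total for w, c in counts.items()}

# Hypothetical brand descriptions gathered from different pages
consistent = [
    "Acme is a payroll platform",
    "Acme is a payroll platform for startups",
    "Acme is a payroll platform with tax filing",
]
inconsistent = [
    "Acme is a payroll platform",
    "Acme is a staffing marketplace",
    "Acme is a consulting agency",
]

prefix = ("Acme", "is", "a")
for name, corpus in [("consistent", consistent), ("inconsistent", inconsistent)]:
    dist = next_word_distribution(corpus, prefix)
    top = max(dist, key=dist.get)
    print(f"{name}: top={top!r} p={dist[top]:.2f} options={len(dist)}")
```

The consistent corpus yields a single continuation ("payroll", p = 1.00), while the inconsistent one splits probability evenly across three competing descriptions.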

Role of Source Authority

Not all data contributes equally to AI decision paths. Source authority acts as a weighting factor inside the decision tree.

During training and fine-tuning, models learn to associate certain signals with reliability, such as:

- Repetition across credible sources

- Structured explanations

- Clear definitions early in the content

- Consistent entity relationships

When generating answers, the model tends to favor reasoning paths that resemble these authoritative patterns.

This is one reason research-driven documentation, such as OpenAI technical reports, can shape how models frame explanations, terminology and tone.

For AIO practitioners, this means authority isn’t just about backlinks or citations. It’s about publishing consistent, well-structured signals that models can learn to treat as the confident version of your narrative.
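Public documentation does not describe models storing per-source authority scores, so the weighting below is an analogy under an explicit assumption: each source type gets a hypothetical multiplier. A related real-world practice is that some published training recipes sample higher-quality corpora more often than their raw size alone would suggest.

```python
from collections import defaultdict

def weighted_distribution(claims, weights):
    """Combine (source, answer) claims into a distribution, scaling each claim by its source weight."""
    scores = defaultdict(float)
    for source, answer in claims:
        scores[answer] += weights.get(source, 1.0)  # unlisted sources get a neutral weight of 1.0
    total = sum(scores.values())
    return {answer: s / total for answer, s in scores.items()}

# Hypothetical claims about what "AIO" stands for, tagged by source type
claims = [
    ("docs", "AI optimization"),
    ("docs", "AI optimization"),
    ("forum", "all-in-one"),
    ("forum", "all-in-one"),
    ("forum", "all-in-one"),
]

unweighted = weighted_distribution(claims, {})
weighted = weighted_distribution(claims, {"docs": 3.0, "forum": 1.0})  # hypothetical weights
print(unweighted)  # the forum answer wins on raw volume
print(weighted)    # the docs answer wins once sources are weighted
```

On raw volume the forum answer wins (0.6 vs 0.4); with the hypothetical weights the documentation answer wins (about 0.67 vs 0.33).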

Implications for AIO

Understanding answer path generation changes how content should be created for AI-driven visibility.

Optimizing for AIO is no longer about ranking a page; it’s about influencing decision trees.

Practically, this means:

- Structuring content so reasoning flows logically

- Reinforcing a single, consistent explanation across pages

- Avoiding contradictory definitions or fragmented messaging

- Using clear topic hierarchies to support AI overviews optimization
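Auditing a site for contradictory definitions is one practical way to act on the list above. The sketch below is a rough heuristic under stated assumptions: the `pages` dictionary is hypothetical sample content, and the regular expression only catches the literal "X is a/an/the ..." pattern, so a real audit would need sturdier extraction.

```python
import re
from collections import defaultdict

# Hypothetical site content: page path -> page text
pages = {
    "/about": "AIO is the practice of optimizing content for AI-generated answers.",
    "/blog/aio-guide": "AIO is the practice of optimizing content for AI-generated answers.",
    "/services": "AIO is an all-in-one marketing package.",
}

# Matches sentences shaped like "Term is a/an/the definition."
DEFINITION = re.compile(r"\b([A-Z][A-Za-z]+) is (?:an?|the) ([^.]+)\.")

def find_conflicting_definitions(pages):
    """Group definitions by term and return only terms defined in more than one way."""
    definitions = defaultdict(lambda: defaultdict(list))
    for url, text in pages.items():
        for term, definition in DEFINITION.findall(text):
            definitions[term][definition.strip().lower()].append(url)
    return {term: dict(defs) for term, defs in definitions.items() if len(defs) > 1}

for term, defs in find_conflicting_definitions(pages).items():
    print(f"'{term}' has {len(defs)} competing definitions:")
    for definition, urls in defs.items():
        print(f"  {definition!r} on {urls}")
```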

When your content aligns with how LLMs build and traverse decision trees, you reduce ambiguity and increase the likelihood that AI selects your narrative as the preferred answer path.

In short, AIO success depends on becoming the default reasoning route inside the model.

FAQs

How does AI decide answers?

AI decides answers by building them token by token, favoring the continuations with the highest probability given the context, its training data and learned authority signals.

What is an LLM decision tree?

An LLM decision tree is a conceptual model describing how large language models branch through possible reasoning routes before generating a final answer.

Why do AI answers sometimes change for the same question?

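Small changes in wording, context, or prior conversation can activate different reasoning paths within the decision tree, leading to different outputs. Even with identical wording, many AI products sample from the probability distribution rather than always choosing the most likely token, so repeated runs can diverge.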
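Temperature sampling, a standard decoding technique, shows how identical input can still produce different outputs. The logits below are invented and each seed stands in for a separate run; whether a given product samples, and at what temperature, varies by deployment.

```python
import math
import random

def sample_with_temperature(logits, temperature, rng):
    """Sample one token; lower temperature sharpens the distribution, higher flattens it."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = {tok: score / temperature for tok, score in logits.items()}
    m = max(scaled.values())  # subtract the max for numerical stability
    weights = [math.exp(s - m) for s in scaled.values()]
    return rng.choices(list(scaled), weights=weights, k=1)[0]

# Hypothetical logits for the first word of an answer to the same question
logits = {"Generally": 2.0, "Yes": 1.8, "It": 1.5}

for seed in range(5):
    rng = random.Random(seed)  # a different seed stands in for a different run
    print(seed, sample_with_temperature(logits, temperature=1.0, rng=rng))
```

As the temperature approaches zero, the top token dominates and output becomes effectively deterministic; higher temperatures spread probability across more words.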

Can content influence AI reasoning paths?

Yes. Consistent structure, an authoritative tone and clear definitions can strengthen specific reasoning routes, increasing the chance that AI follows your narrative.