AI models do not absorb the entire web. They rely on strict training data filtering systems that decide which pages are eligible for ingestion, reuse and citation. Content is excluded when it fails quality, trust, clarity, or structural thresholds. This guide explains how AI models filter web data, why pages get excluded and how to design content that consistently qualifies for AI dataset inclusion using clear signals, strong structure and AIO-aligned practices.

AI Training Data Filters

AI-powered search and generative systems are selective by design. Contrary to popular belief, visibility inside AI answers is not a reward for publishing more content; it is the result of passing multiple filtration layers applied long before responses are generated.

Training data filtering determines whether your content is even eligible to be learned, referenced, summarized, or recalled by large language models. If your pages fail these filters, they are effectively invisible to AI systems, regardless of how well they rank in traditional search.

This makes filtration awareness a foundational requirement for modern AIO, AEO & GEO strategies.

How AI models filter web data

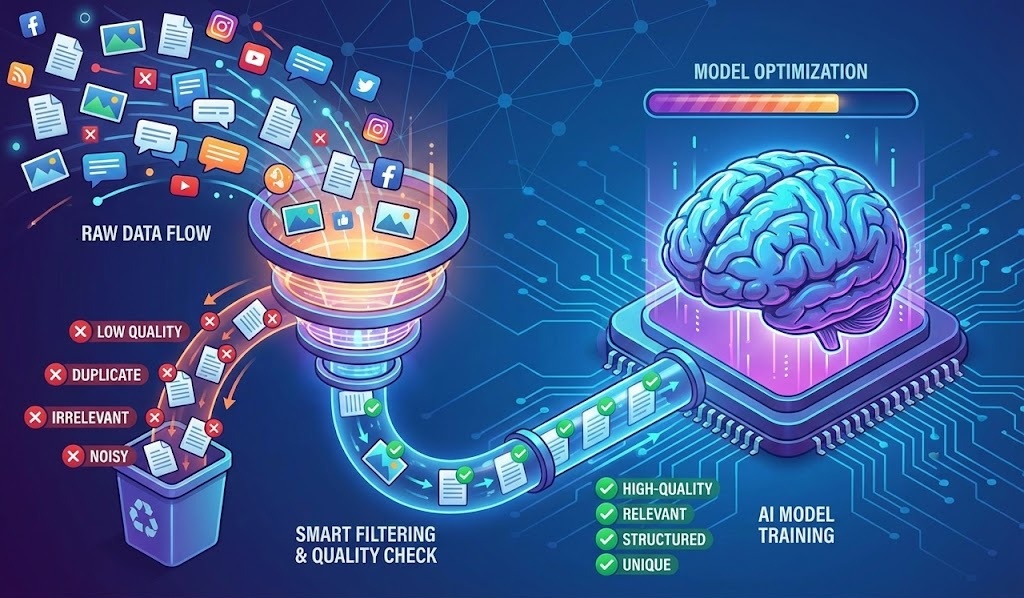

AI models rely on multi-stage filtering pipelines when evaluating web content for training or reuse. These pipelines are designed to reduce noise, risk and ambiguity at scale.

At a high level, filtering evaluates three core dimensions:

Source trustworthiness

Models prioritize domains and pages that demonstrate consistency, authorship clarity and topical stability over time.

Content clarity and structure

Unstructured, fragmented, or context-poor pages are harder for models to interpret and are often filtered out early.

Semantic usefulness

AI systems prefer content that explains concepts clearly, defines terms and provides reusable insights rather than thin or purely promotional material.

During this process, signals are aggregated across crawls, historical snapshots and known datasets. Content that repeatedly fails to meet minimum thresholds is progressively deprioritized or excluded entirely.

This is where content filtration AI becomes decisive: filtration is not a one-time event, but an ongoing evaluation.

What causes exclusion

Most exclusions are not punitive; they are preventative. AI models aim to avoid unreliable, low-signal, or high-risk data.

Common causes include:

- Ambiguous authority: Pages with no clear author, organization, or topical ownership struggle to establish credibility signals.

- Low informational density: Content that is verbose but shallow, repetitive, or padded with filler fails usefulness thresholds.

- Inconsistent semantics: Contradictory claims, unclear definitions, or frequent topic drift confuse models and reduce reuse confidence.

- Over-optimization patterns: Keyword stuffing, templated phrasing, or excessive internal repetition can trigger filtration heuristics.

- Structural weakness: Poor heading hierarchy, missing context, or lack of explanatory depth make parsing difficult at scale.

When these issues accumulate, the AI dataset inclusion probability drops sharply even if the page remains indexed in search engines.

How to ensure inclusion

Inclusion is achieved by designing content for interpretability, not manipulation. The goal is to make your page easy for AI systems to understand, trust and reuse.

Effective practices include:

- Explicit topical framing

State clearly what the page explains, who it is for and why it exists. - Consistent entity signals

Use stable naming, definitions and references so models can anchor meaning reliably. - Explanatory depth

Prioritize clarity over cleverness. Simple explanations of complex topics outperform vague expert jargon. - Structural transparency

Use predictable heading logic and coherent paragraph progression to aid machine parsing. - AIO training signals alignment

Ensure your content supports downstream reuse in summaries, answers, and citations rather than one-off consumption.

These steps do not guarantee inclusion, but they significantly increase eligibility across filtration stages.

Quality thresholds

AI systems apply implicit quality thresholds rather than explicit scores. While exact benchmarks are undisclosed, consistent patterns are observable.

High-performing content typically demonstrates:

- Original synthesis

Not just aggregation, but interpretation and explanation. - Stable factual grounding

Clear definitions, scoped claims and absence of speculative language where facts are expected. - Narrative coherence

Logical flow from premise to conclusion without abrupt topic shifts. - Low noise-to-signal ratio

Every paragraph contributes meaning; filler content is minimal.

Meeting these thresholds positions your content as reusable training material rather than disposable web copy.

Inclusion checklist

Use the following checklist to self-audit your pages for training eligibility:

- Clear page purpose and scope

- Defined author or organizational ownership

- Logical H1–H3 structure

- Consistent terminology and definitions

- Informational depth beyond surface-level explanations

- Minimal repetition or padding

- Alignment with training data filtering expectations

This checklist supports long-term eligibility, not just short-term visibility.

Internal and external context

For broader AI search visibility, this topic connects directly with Google AI Overviews and with strategic frameworks such as AIO, AEO & GEO, which focus on making content understandable and reusable across AI-driven discovery systems.

For external reference on how training datasets are governed, review OpenAI Dataset Policies, which outline high-level principles behind dataset selection, safety and quality control.

FAQs

Why do some pages get excluded from AI training?

Pages are excluded when they lack clear authority, provide low informational value, or fail structural and semantic quality thresholds required for safe and useful training data.

Does ranking on Google guarantee AI inclusion?

No. Search ranking and AI dataset eligibility are evaluated differently. High-ranking pages can still fail filtration if they lack clarity or reuse value.

Can older content be included later?

Yes. AI systems reassess data sources over time. Improving structure, clarity and trust signals can increase future inclusion likelihood.

Is AI filtering the same across ChatGPT, Gemini, and Perplexity?

No. Each system applies different filtration criteria and reuse strategies, even when trained on overlapping data sources.

Conclusion

AI visibility begins long before an answer is generated. Training data filtering determines whether your content is even considered worthy of learning. By focusing on clarity, structure and genuine explanatory value, you shift from chasing rankings to earning inclusion.

Content that respects filtration logic becomes durable, capable of being recalled, summarized and trusted across AI systems.