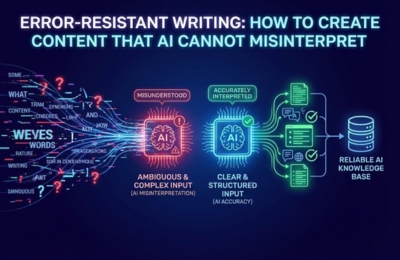

AI systems don’t “misread” content the way humans do; they misinterpret structure, ambiguity and context gaps. AI-safe writing focuses on clarity, explicit intent and controlled context so large language models can extract meaning without distortion. This guide explains why AI misinterpretation happens, how ambiguous writing triggers errors and how to apply clarity frameworks and templates to produce AI error-proof content that remains accurate across search engines, AI assistants and generative answer systems.

Error-Resistant Writing

Error-resistant writing is the discipline of designing content so that AI systems interpret it exactly as intended, without hallucination, misclassification, or context drift. As AI-generated answers increasingly replace traditional search listings, content must now perform two jobs simultaneously: persuade human readers and remain structurally reliable for machines.

Unlike human readers, AI does not infer intent through emotion or intuition. It relies on sentence structure, entity clarity, semantic consistency and contextual signals. When those elements are weak, even well-written content can be misunderstood or reused incorrectly.

This is why AI-safe writing has become a foundational requirement for AIO, AEO, and GEO strategies, not just an editorial preference.

Why AI Misinterprets Content

AI misinterpretation usually occurs not because information is wrong, but because it is underspecified. Large language models predict meaning from patterns, not intent.

Common causes include:

- Missing subject-object clarity in sentences

- Overloaded paragraphs covering multiple ideas

- Implicit assumptions that humans fill in, but AI cannot

- Inconsistent terminology across sections

Research from Stanford University on AI writing analysis shows that LLMs are more likely to distort meaning when content blends explanation, opinion and instruction in the same structural unit.

From an AI perspective, ambiguity creates competing interpretations. The model resolves this conflict probabilistically, often producing answers that sound correct but are contextually wrong.

This is why multi-format AI training, LLM authority ranking systems and modern AIO frameworks prioritize clarity over creativity.

Ambiguous Sentence Pitfalls

Ambiguous writing is the fastest way to break AI comprehension. A sentence that works for humans may fail for machines if roles, scope, or conditions are unclear.

Example of ambiguity:

“This framework improves accuracy when applied correctly.”

Questions an AI may ask internally:

- What framework?

- Improves the accuracy of what?

- Under which conditions?

Error-resistant rewrite:

“This clarity framework improves AI content interpretation accuracy when applied to informational blog content during initial drafting.”

The second version removes guesswork. This is the essence of clarity writing: making every dependency explicit.

High-risk ambiguity patterns include:

- Pronouns without clear antecedents

- Vague modifiers like better, faster, and effective

- Conditional phrases without stated triggers

Left unchecked, these patterns undermine content that is meant to be AI error-proof, especially in zero-click environments.
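The high-risk patterns listed above can be partly caught with a simple automated check before publishing. The following is a minimal sketch, not a complete linter: the word lists and the function name `flag_ambiguity` are hypothetical and chosen only for illustration, and the pronoun check looks only at the first word of a sentence.

```python
import re

# Hypothetical word lists for illustration; tune them for your own content.
VAGUE_MODIFIERS = {"better", "faster", "effective"}
OPENING_PRONOUNS = {"it", "they", "them"}
UNTRIGGERED_CONDITIONALS = ("when applied correctly", "if needed", "where appropriate")


def flag_ambiguity(sentence: str) -> list[str]:
    """Return a list of warnings for one sentence (empty if none are found)."""
    warnings = []
    lowered = sentence.lower()
    words = re.findall(r"[a-z']+", lowered)

    # A sentence that opens with a bare pronoun depends on earlier context.
    if words and words[0] in OPENING_PRONOUNS:
        warnings.append(f"opens with pronoun '{words[0]}'")

    # Vague modifiers make a claim without saying what is measured.
    for word in words:
        if word in VAGUE_MODIFIERS:
            warnings.append(f"vague modifier '{word}'")

    # Conditional phrases that never state the actual condition.
    for phrase in UNTRIGGERED_CONDITIONALS:
        if phrase in lowered:
            warnings.append(f"conditional without stated trigger: '{phrase}'")

    return warnings
```

Run against the ambiguous example sentence from this section, the sketch reports the unstated condition ("when applied correctly"), while the error-resistant rewrite returns no warnings. A keyword check like this only surfaces candidates for review; it cannot judge whether a sentence is actually clear.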

Clarity Frameworks

Clarity frameworks are structured writing systems that constrain interpretation pathways for AI while maintaining readability for humans.

A practical AI-safe clarity framework includes:

- Single intent per paragraph – one topic, one purpose

- Explicit subject mapping – name entities, avoid “this/that”

- Stable terminology – one concept, one label

- Context anchoring – define the scope before the explanation

These principles align directly with AIO, AEO and GEO strategies, where content is evaluated for reusability inside AI answers rather than traditional rankings.

Well-structured clarity frameworks also reinforce LLM authority ranking, because consistent phrasing increases semantic confidence across retrieval systems.
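The "stable terminology" principle is the easiest one in this framework to check mechanically. As a rough sketch, assuming a hand-maintained glossary that maps each preferred label to the variants it should replace (the `GLOSSARY` entries and the function name `find_term_drift` are hypothetical), a draft can be scanned for competing labels:

```python
import re

# Hypothetical glossary: preferred label -> variants that should not appear.
GLOSSARY = {
    "AI-safe writing": ["AI-proof writing", "machine-safe writing"],
}


def find_term_drift(text: str, glossary: dict[str, list[str]]) -> dict[str, list[str]]:
    """Map each preferred label to the competing variants found in the text."""
    drift = {}
    for preferred, variants in glossary.items():
        # Case-insensitive literal match for each variant.
        found = [v for v in variants if re.search(re.escape(v), text, re.IGNORECASE)]
        if found:
            drift[preferred] = found
    return drift


draft = "AI-safe writing matters. Machine-safe writing also helps."
print(find_term_drift(draft, GLOSSARY))
# {'AI-safe writing': ['machine-safe writing']}
```

The result lists which variant labels to replace with the preferred one. The check depends entirely on the glossary, so it only finds drift for concepts that have already been listed.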

Reducing Contextual Risk

Contextual risk refers to the chance that AI extracts a statement outside its intended boundaries. This is one of the most common causes of hallucinated summaries and incorrect citations.

To reduce contextual risk:

- Place definitions before analysis

- Separate factual claims from interpretation

- Avoid stacking multiple conclusions in one section

- Restate the scope when transitioning between ideas

For example, content optimized for multi-format AI training must remain consistent whether it appears as a paragraph, snippet, table, or conversational answer. If meaning changes when format changes, contextual risk is high.

AI-safe writing minimizes this risk by treating every section as potentially reusable in isolation.

Templates

Below are error-resistant templates designed specifically for AI-safe writing and unambiguous content reuse.

Definition Template

- [Concept] refers to [explicit meaning] within the context of [scope or application].

Instruction Template

- To [desired outcome], apply [method] during [specific stage] using [defined inputs].

Explanation Template

- This process works because [cause] leads to [effect] under [conditions].

Comparison Template

- Unlike [alternative], [primary option] focuses on [key distinction], resulting in [outcome].

These templates are designed to reduce interpretation variance across AI systems while preserving human readability.
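For teams that generate content programmatically, the bracketed slots in these templates can be filled by code that refuses to leave any slot empty. This is a minimal sketch under that assumption; the helper name `fill_template` is hypothetical, and the scope value in the example is illustrative only.

```python
import re

DEFINITION_TEMPLATE = (
    "[Concept] refers to [explicit meaning] within the context of "
    "[scope or application]."
)


def fill_template(template: str, values: dict[str, str]) -> str:
    """Replace each [slot] with its value; raise KeyError if a slot has no value."""

    def substitute(match: re.Match) -> str:
        slot = match.group(1)
        if slot not in values:
            raise KeyError(f"missing value for slot '[{slot}]'")
        return values[slot]

    # Match any text enclosed in square brackets and treat it as a slot name.
    return re.sub(r"\[([^\]]+)\]", substitute, template)


sentence = fill_template(
    DEFINITION_TEMPLATE,
    {
        "Concept": "Contextual risk",
        "explicit meaning": "the chance that AI extracts a statement outside its intended boundaries",
        "scope or application": "AI-generated summaries",  # illustrative scope
    },
)
print(sentence)
```

Raising an error on a missing slot is a deliberate choice here: an unfilled placeholder such as "[scope or application]" is exactly the kind of underspecified statement the templates are meant to prevent.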

FAQs

How can writers keep AI from misunderstanding content?

Avoid implicit assumptions. Use explicit subjects, stable terminology and single-intent paragraphs so AI can extract meaning without guessing.

What is AI-safe writing?

AI-safe writing is content structured to prevent misinterpretation by language models through clarity, context control and unambiguous phrasing.

Why does AI misinterpret well-written content?

Because AI evaluates structure and semantics, not intent. Content written for humans often relies on implied meaning that AI cannot reliably infer.

Does clarity writing reduce SEO performance?

No. Clarity writing improves AI visibility, featured snippet eligibility and reuse across generative search systems while maintaining human engagement.