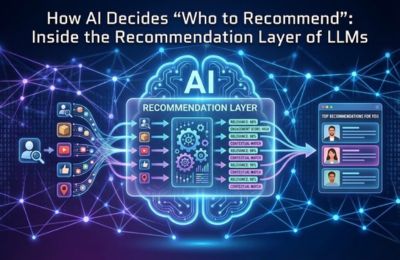

Large Language Models don’t “randomly” recommend brands, tools, or services. Behind every suggestion sits an AI recommendation layer, a complex decision system that blends relevance, trust, consistency and authority signals. This article pulls back the curtain on how that layer works, why certain brands appear again and again and what you can do to increase your chances of being recommended by LLMs like ChatGPT, Gemini, Claude and Perplexity.

AI Recommendation Layer

When users ask AI systems for advice (“Which tool should I use?”, “Who’s best for this?”, “What brand do you recommend?”), they assume the response is neutral and instant.

In reality, that answer is the output of a layered evaluation process known as the AI recommendation layer.

This layer sits above raw information retrieval. It decides who gets surfaced, who gets ignored, and who gets consistently recommended across thousands of conversations.

Understanding this layer is no longer optional for brands that want visibility inside AI-powered search and suggestion environments.

What recommendation systems do

At a foundational level, recommendation systems exist to reduce uncertainty for the user.

In traditional platforms (Netflix, Amazon, Spotify), recommendations are driven by behavior, similarity and historical outcomes. LLM-based systems extend this logic but with a critical twist.

Instead of recommending content, LLMs often recommend entities:

- Brands

- Tools

- Platforms

- Experts

- Services

The LLM suggestion engine doesn’t simply look for keywords. It synthesizes patterns from its training data and live retrieval layers to answer one core question:

Which option is most likely to satisfy this user intent with the least risk of being wrong?

This is why AI recommendations often feel conservative, familiar and authority-driven.

Key functions of the recommendation layer include:

- Filtering low-confidence or low-consensus entities

- Prioritizing entities with strong contextual fit

- Avoiding recommendations that could be misleading or harmful

- Reinforcing previously validated answers

This is not ranking in the SEO sense. It’s closer to probabilistic trust modeling.
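To make the filter-then-prioritize idea concrete, here is a minimal sketch in Python. It is a toy model, not how any real LLM computes its answers: the `Candidate` class, the `confidence` and `context_fit` numbers, the 0.6 threshold and the tool names are all assumptions made up for illustration.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    name: str
    confidence: float   # hypothetical 0..1 estimate of how well validated the entity is
    context_fit: float  # hypothetical 0..1 estimate of fit with the user's intent


def recommend(candidates, min_confidence=0.6, top_k=3):
    """Drop low-confidence entities, then rank the rest by confidence-weighted fit."""
    trusted = [c for c in candidates if c.confidence >= min_confidence]
    ranked = sorted(trusted, key=lambda c: c.context_fit * c.confidence, reverse=True)
    return [c.name for c in ranked[:top_k]]


# Illustrative data only; these tools do not exist.
candidates = [
    Candidate("EstablishedTool", confidence=0.9, context_fit=0.7),
    Candidate("NicheTool", confidence=0.8, context_fit=0.9),
    Candidate("ObscureTool", confidence=0.3, context_fit=0.95),
]

print(recommend(candidates))  # ['NicheTool', 'EstablishedTool']
```

Note that `ObscureTool` has the best contextual fit but never reaches the ranking step, which mirrors the conservative behavior described above.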

Hidden factors AI uses

The most important signals used by the AI recommendation layer are rarely visible in analytics dashboards. They emerge from how language models learn and reinforce patterns at scale.

Here are the hidden factors that matter most.

1. Entity consistency

If a brand or concept appears consistently described the same way across multiple sources, the model develops higher confidence in it.

Conflicting descriptions reduce recommendation likelihood even if SEO metrics look strong.
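One rough way to audit your own entity consistency is to compare how different sources describe you. The sketch below uses average pairwise word overlap (Jaccard similarity) as a stand-in; that metric is our assumption for illustration, not something LLMs are known to compute, and "Acme" is a hypothetical brand.

```python
import re
from itertools import combinations


def tokens(text):
    """Lowercase a description and split it into a set of words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def jaccard(a, b):
    """Share of words two descriptions have in common (1.0 means identical sets)."""
    union = a | b
    return len(a & b) / len(union) if union else 1.0


def consistency_score(descriptions):
    """Average pairwise overlap across all descriptions of one entity."""
    pairs = list(combinations([tokens(d) for d in descriptions], 2))
    if not pairs:
        return 1.0  # zero or one description cannot conflict with itself
    return sum(jaccard(a, b) for a, b in pairs) / len(pairs)


# Hypothetical descriptions collected from three different sources.
consistent = [
    "Acme is an invoicing tool for freelancers",
    "Acme is an invoicing tool built for freelancers",
    "Acme: an invoicing tool for freelancers",
]
conflicting = [
    "Acme is an invoicing tool for freelancers",
    "Acme is an enterprise CRM platform",
    "Acme offers marketing automation for agencies",
]

print(consistency_score(consistent) > consistency_score(conflicting))  # True
```

Word overlap misses paraphrases, so treat a low score as a prompt to read the descriptions side by side, not as a verdict.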

2. Contextual relevance density

It’s not how often you’re mentioned; it’s where you’re mentioned.

Mentions that appear inside:

- Explanatory articles

- Comparisons

- Use-case discussions

- Problem–solution narratives

carry more recommendation weight than directory-style mentions.
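The difference between counting mentions and weighting them by context can be sketched as follows. The context labels and weight values are assumptions chosen purely to illustrate the idea; no LLM publishes weights like these.

```python
# Hypothetical weights: richer contexts count for more than bare listings.
CONTEXT_WEIGHTS = {
    "explanatory_article": 1.0,
    "comparison": 0.9,
    "use_case": 0.8,
    "problem_solution": 0.8,
    "directory_listing": 0.2,
}


def weighted_mentions(mentions, weights=CONTEXT_WEIGHTS, default=0.1):
    """Sum mention weights by context type; unknown contexts get a small default."""
    return sum(weights.get(context, default) for context in mentions)


# Brand A has ten directory listings; Brand B has three context-rich mentions.
brand_a = ["directory_listing"] * 10
brand_b = ["explanatory_article", "comparison", "use_case"]

print(len(brand_a) > len(brand_b))                               # True: A has more mentions
print(weighted_mentions(brand_b) > weighted_mentions(brand_a))   # True: B carries more weight
```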

3. Consensus reinforcement

LLMs favor entities that appear repeatedly across independent sources, saying roughly the same thing.

This is why brands that show up in:

- Expert blogs

- Industry explainers

- Q&A-style content

are more likely to be suggested than brands that only publish self-promotional pages.
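A simple self-audit for consensus is to count how many independent domains mention you, excluding your own site. The sketch below assumes you already have a list of URLs where your brand appears; the URLs and the `acme.example` domain are placeholders.

```python
from urllib.parse import urlparse


def independent_source_count(urls, own_domain):
    """Count distinct domains that mention the brand, ignoring the brand's own site."""
    domains = set()
    for url in urls:
        host = urlparse(url).netloc.lower()
        if host.startswith("www."):
            host = host[4:]
        if host and host != own_domain:
            domains.add(host)
    return len(domains)


# Placeholder URLs for illustration.
mentions = [
    "https://www.acme.example/pricing",   # self-promotional, not counted
    "https://blog-one.example/review",
    "https://blog-one.example/compare",   # same domain as above, counted once
    "https://qa-site.example/q/1",
]

print(independent_source_count(mentions, own_domain="acme.example"))  # 2
```

Four mentions collapse to two independent sources here, which is the number that matters for consensus.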

4. Narrative stability

If a brand’s role is clear (what it does, who it’s for and when to recommend it), AI systems can confidently insert it into answers.

Ambiguous positioning leads to omission.

5. Risk minimization bias

LLMs are trained to avoid hallucination and user harm. Recommending an obscure or weakly validated brand increases risk.

As a result, the recommendation layer prefers:

- Established entities

- Clearly defined offerings

- Verifiable claims

This bias explains why “safe” brands dominate early AI recommendations.

Why some brands get recommended more

From the outside, it looks like favoritism.

From inside the model, it’s signal accumulation.

Brands that dominate AI recommendations usually share the same structural advantages.

They’ve been “seen” in the right contexts

These brands appear in educational content, not just landing pages. They are referenced while explaining concepts, not merely selling solutions.

This aligns closely with AI overviews optimization, where context-rich mentions influence generative summaries.

Their messaging is stable across platforms

Their brand story doesn’t change dramatically from site to site. This consistency makes them easier for LLMs to encode and retrieve.

They match clear user intents

When a user asks a question, the model looks for entities that historically align with that question type.

Brands optimized for AIO (Artificial Intelligence Optimization) often outperform those focused only on keyword rankings.

They benefit from reinforcement loops

Once a brand is recommended and appears in multiple generated answers, it reinforces its own probability of being recommended again.

This creates a compounding visibility effect that is similar to, but distinct from, traditional SEO authority.
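The compounding effect can be pictured with a toy simulation. The update rule below (logistic growth with a made-up `rate`) is an assumption chosen for illustration only; it is not a measured property of any LLM.

```python
def simulate_reinforcement(initial_probability, rounds=10, rate=0.3):
    """Toy model: each round, being recommended raises the chance of the next recommendation.

    The gain is proportional to the current probability and to the remaining
    headroom, so the value grows toward 1.0 without exceeding it.
    """
    p = initial_probability
    history = [p]
    for _ in range(rounds):
        p = p + rate * p * (1.0 - p)
        history.append(p)
    return history


# Two hypothetical brands that differ only in their starting visibility.
established = simulate_reinforcement(0.20)
newcomer = simulate_reinforcement(0.05)

print(f"established: {established[0]:.2f} -> {established[-1]:.2f}")
print(f"newcomer:    {newcomer[0]:.2f} -> {newcomer[-1]:.2f}")
```

In this model the established brand stays ahead after ten rounds, which is one way to picture why early visibility tends to persist.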

How to improve your recommendation score

You can’t directly “optimize” the AI recommendation layer the way you tweak meta tags. But you can influence the signals it consumes.

Here’s how expert teams approach it.

Build explanatory authority, not just pages

Create content that teaches, not just promotes. LLMs learn better from explanations than from sales copy.

This is where AEO (answer engine optimization) and GEO (generative engine optimization) strategies intersect with recommendation systems: answer clarity feeds suggestion confidence.

Control your entity narrative

Ensure your brand is described consistently:

- What you do

- Who you are for

- When you should be recommended

This reduces ambiguity in the model’s internal representations.

Expand contextual presence

Aim to be mentioned inside:

- How-to guides

- Industry breakdowns

- Comparative discussions

These mentions feed the AI ranking flow far more effectively than isolated backlinks.

Reduce semantic noise

If your content repeats the same points without adding depth, it weakens trust signals. Repetition adds volume without giving a model any new context to learn from.

Align internal linking with AI logic

Use internal links that reinforce topical depth and clarity, such as:

- AI overviews optimization

- AIO

- AEO and GEO

This strengthens your site’s conceptual graph, which LLMs indirectly absorb.

For deeper technical insight into recommendation research, see OpenAI RecSys Research.

Examples

Example 1: Tool recommendations

When users ask, “What’s the best tool for X?”, LLMs do not always scan the web live. Without a retrieval step, they rely on previously reinforced patterns.

Tools that appear consistently in:

- Tutorials

- Expert walkthroughs

- Comparison articles

are far more likely to surface than tools with aggressive ads but thin educational presence.

Example 2: Service providers

In professional services, recommendations skew toward brands that:

- Publish deep, instructional content

- Appear in thought leadership contexts

- Have a narrow, well-defined positioning

Generalists with unclear messaging are frequently excluded.

Example 3: Emerging brands

New brands can break into recommendations, but only when they:

- Attach themselves clearly to a niche

- Appear alongside established entities in explanations

- Avoid exaggerated or unverifiable claims

This is why behind-the-curtain optimization matters more than surface-level visibility.

FAQs

How does AI choose who to recommend?

AI systems rely on an internal recommendation layer that evaluates contextual relevance, consistency, authority signals and risk. Brands that appear repeatedly in trusted, explanatory contexts are more likely to be suggested.

Is the AI recommendation layer the same as search ranking?

No. Search ranking prioritizes relevance and authority signals for pages, while the AI recommendation layer prioritizes confidence and consensus around entities.

Can small brands get recommended by LLMs?

Yes, but only when their positioning is clear, their messaging is consistent and they appear in educational or problem-solving contexts rather than pure promotion.

Does SEO still matter for AI recommendations?

SEO matters indirectly. Strong SEO improves discoverability, but recommendation systems depend more on narrative clarity, topical authority and contextual reinforcement.