As AI-powered search becomes the primary discovery layer, brands face a new technical challenge: different large language models (LLMs) often describe the same company in conflicting ways. This guide explains why cross-LLM inconsistency happens, how to detect it and how enterprise teams can engineer alignment so ChatGPT, Gemini and Claude reliably present the same facts, positioning and authority signals about your brand.

Cross-LLM Consistency

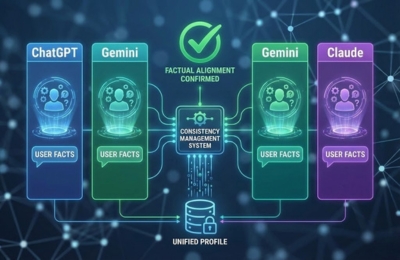

Cross-LLM consistency refers to the practice of ensuring that multiple AI models, such as ChatGPT, Gemini and Claude, provide the same factual, contextual and narrative information about your brand.

In traditional SEO, ranking discrepancies were mostly about position. In AI-driven discovery, discrepancies are about truth itself. One model may describe your company as an enterprise platform, another as a mid-market service provider and a third may misattribute your core offerings altogether.

For enterprise brand teams, this is no longer a theoretical risk. AI models are now trusted intermediaries between your brand and decision-makers, investors, partners and customers. When those intermediaries disagree, credibility erodes.

Cross-LLM consistency is therefore not a branding exercise alone. It is a technical discipline that intersects with AI brand consistency, AIO alignment and long-term multi-model alignment strategies.

Why AI models provide conflicting answers

LLMs do not share a single memory, training dataset, or update cycle. Each model builds its understanding of your brand independently, which creates divergence over time.

Several structural factors drive this problem:

- Training data variance: ChatGPT, Gemini and Claude ingest different public, licensed and synthetic datasets. If your brand messaging is inconsistent across the web, each model may learn a different “truth.”

- Temporal drift: Models are updated at different intervals. One model may reflect your latest rebrand, product pivot or positioning shift, while another still references outdated information.

- Retrieval bias: When generating answers, models prioritize sources they consider authoritative. If one model associates your brand with press coverage and another with forum mentions, their outputs will differ even if the underlying facts overlap.

- Prompt interpretation differences: Each model interprets user intent differently. A query like “What does this company do?” may trigger a technical explanation in one model and a marketing-style summary in another.

These inconsistencies are not bugs. They are emergent properties of how large language models reason over fragmented information.

How to detect inconsistencies

Most brands do not realize they have a cross-LLM problem until prospects flag contradictions. By then, the damage is already visible.

Detection must be intentional and systematic.

Start with parallel querying. Ask the same factual questions about your brand across ChatGPT, Gemini and Claude using neutral, non-leading prompts. Document differences in descriptions, product scope, leadership attribution and market positioning.
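The parallel querying step can be sketched as a small harness. This is a minimal illustration, not a definitive implementation: the model functions are stand-in stubs with canned answers (no vendor API is called), and `parallel_query`, `differing_questions` and the brand name ExampleCo are hypothetical names introduced here. The exact-match comparison is deliberately crude; real answers would need a softer comparison.

```python
from typing import Callable, Dict, List

# Hypothetical stand-in type: any function that takes a prompt and returns text.
ModelFn = Callable[[str], str]

def parallel_query(models: Dict[str, ModelFn],
                   questions: List[str]) -> Dict[str, Dict[str, str]]:
    """Ask every model the same questions; return {question: {model: answer}}."""
    results = {}
    for question in questions:
        results[question] = {name: ask(question) for name, ask in models.items()}
    return results

def differing_questions(results: Dict[str, Dict[str, str]]) -> List[str]:
    """Return questions on which at least two models gave different answers."""
    return [
        question
        for question, answers in results.items()
        if len({a.strip().lower() for a in answers.values()}) > 1
    ]

# Stub models with canned answers, for illustration only.
stub_models = {
    "model_a": lambda q: "An enterprise analytics platform.",
    "model_b": lambda q: "A mid-market service provider.",
    "model_c": lambda q: "An enterprise analytics platform.",
}

results = parallel_query(stub_models, ["What does ExampleCo do?"])
flagged = differing_questions(results)
```

In practice each stub would be replaced by a thin wrapper around the relevant provider's client, with the same neutral prompt passed to all of them.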

Next, perform entity consistency audits. Compare how each model defines your brand entity: industry category, competitors, value proposition and geographic footprint. Even small wording differences can signal deeper alignment issues.
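One way to run such an audit, assuming each model's answer has already been reduced to a small set of named fields, is to compare the fields side by side. The field names, values and the `audit_entity_fields` helper below are illustrative assumptions, not a prescribed schema.

```python
from typing import Dict

def audit_entity_fields(profiles: Dict[str, Dict[str, str]]) -> Dict[str, Dict[str, str]]:
    """Return {field: {model: value}} for fields where models disagree.

    A field that one model omits is reported with the value "<missing>".
    Comparison ignores case and surrounding whitespace.
    """
    fields = sorted({field for profile in profiles.values() for field in profile})
    mismatches = {}
    for field in fields:
        values = {model: profile.get(field, "<missing>")
                  for model, profile in profiles.items()}
        if len({v.strip().lower() for v in values.values()}) > 1:
            mismatches[field] = values
    return mismatches

# Hypothetical per-model entity profiles, extracted by hand or by a parser.
profiles = {
    "model_a": {"industry": "Analytics software", "footprint": "Global"},
    "model_b": {"industry": "analytics software", "footprint": "North America"},
    "model_c": {"industry": "Analytics software"},
}

mismatches = audit_entity_fields(profiles)
```

Here only `footprint` is flagged: the industry values differ in capitalization alone, while the geographic footprint differs in substance and is absent from one model.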

Then evaluate confidence and certainty language. Models often hedge when information is unclear. Phrases like “appears to,” “may be,” or “is believed to” indicate that the model's knowledge of your brand is thin and its confidence is low.
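A rough way to quantify hedging is to count such phrases relative to answer length. The sketch below uses only the three phrases quoted above; the list, the `hedge_rate` name and the per-100-words normalization are assumptions that a team would tune for its own audits.

```python
import re

# Phrases taken from the text above; extend the list as needed.
HEDGE_PHRASES = ["appears to", "may be", "is believed to"]

# Whole-phrase, case-insensitive match.
HEDGE_PATTERN = re.compile(
    r"\b(?:" + "|".join(re.escape(p) for p in HEDGE_PHRASES) + r")\b",
    re.IGNORECASE,
)

def hedge_count(answer: str) -> int:
    """Number of hedging phrases found in the answer."""
    return len(HEDGE_PATTERN.findall(answer))

def hedge_rate(answer: str) -> float:
    """Hedging phrases per 100 words; 0.0 for empty text."""
    words = answer.split()
    if not words:
        return 0.0
    return 100.0 * hedge_count(answer) / len(words)

sample = "ExampleCo appears to be a software vendor and may be based in Austin."
sample_hedges = hedge_count(sample)
sample_rate = hedge_rate(sample)
```

Comparing the rate for the same question across models gives a simple signal of which model is least certain about the brand.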

Finally, track change velocity. If one model updates its understanding after a content release or press announcement and others do not, you have uneven signal propagation.
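Change velocity can be approximated from dated answer snapshots. The sketch below assumes you store each model's answer per audit date and pick a marker phrase from the new messaging; `propagation_lag`, the dates and the sample answers are all hypothetical.

```python
from datetime import date
from typing import Dict, List, Optional, Tuple

Snapshot = Tuple[date, str]  # (audit date, model answer)

def propagation_lag(release: date,
                    snapshots: Dict[str, List[Snapshot]],
                    marker: str) -> Dict[str, Optional[int]]:
    """Days from release until each model's answer first contains the marker.

    Returns None for a model that has not picked up the marker yet.
    """
    lags: Dict[str, Optional[int]] = {}
    for model, history in snapshots.items():
        hits = [
            day
            for day, text in sorted(history, key=lambda s: s[0])
            if day >= release and marker.lower() in text.lower()
        ]
        lags[model] = (hits[0] - release).days if hits else None
    return lags

release_day = date(2025, 1, 10)
snapshots = {
    "model_a": [
        (date(2025, 1, 5), "ExampleCo sells dashboards."),
        (date(2025, 2, 9), "ExampleCo is an AI analytics platform."),
    ],
    "model_b": [
        (date(2025, 1, 5), "ExampleCo sells dashboards."),
        (date(2025, 2, 9), "ExampleCo sells dashboards."),
    ],
}

lags = propagation_lag(release_day, snapshots, "AI analytics platform")
```

A large gap between models, or a `None` that persists across several audits, is the uneven signal propagation described above. The resolution of the measurement is limited by how often snapshots are taken.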

This diagnostic phase mirrors what advanced teams already do for LLM authority ranking and brand authority AI, but applied horizontally across models instead of vertically within one ecosystem.

Consistency engineering techniques

Once inconsistencies are identified, the solution is not to “optimize for ChatGPT” or “fix Gemini.” The solution is to engineer clarity at the source.

The most effective technique is the canonical truth definition. Your brand must have a single, authoritative articulation of who you are, what you do and how you should be described. This truth must be expressed consistently across high-authority content surfaces.

Structured content plays a critical role here. Clear schema usage, consistent naming conventions and unambiguous descriptors reduce model interpretation variance. This is where AIO alignment intersects directly with technical SEO and semantic clarity.
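As one illustration of structured content, the canonical description can be published as schema.org `Organization` markup in JSON-LD. The sketch below generates that markup from a single dictionary so the same wording is reused everywhere; the company name, URLs and description are placeholders, and whether a given model actually consumes this markup is an assumption rather than a guarantee.

```python
import json

# Placeholder values; replace with the brand's canonical facts.
canonical_entity = {
    "@context": "https://schema.org",
    "@type": "Organization",
    "name": "ExampleCo",
    "url": "https://www.example.com",
    "description": "ExampleCo is an enterprise analytics platform for retail supply chains.",
    # Hypothetical profile URL; list only profiles the brand controls.
    "sameAs": ["https://www.linkedin.com/company/exampleco"],
}

# Serialize once and embed the identical block on every core brand page.
json_ld = json.dumps(canonical_entity, indent=2)
script_tag = f'<script type="application/ld+json">\n{json_ld}\n</script>'
```

Keeping the dictionary in one place (for example, in the site's build configuration) makes it harder for the name or description to drift between pages.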

Another technique is narrative compression. Long, marketing-heavy explanations create room for misinterpretation. Concise, repeatable explanations of your core offering are more likely to be learned consistently across models.

Finally, reinforce entity associations. Explicitly connect your brand to the same industries, use cases and problem spaces across authoritative pages. Models rely heavily on these co-occurrence signals when generating summaries.
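A simple check for this is to confirm that every authoritative page mentions the same set of association terms. The sketch below assumes page text has already been fetched and stripped of markup; the paths, the term list and `missing_associations` are hypothetical, and plain substring matching is only a first approximation.

```python
from typing import Dict, List

def missing_associations(pages: Dict[str, str],
                         terms: List[str]) -> Dict[str, List[str]]:
    """Return {page: [terms not mentioned]} for pages missing any term."""
    report = {}
    for page, text in pages.items():
        lowered = text.lower()
        missing = [term for term in terms if term.lower() not in lowered]
        if missing:
            report[page] = missing
    return report

# Hypothetical page text and canonical association terms.
pages = {
    "/about": "ExampleCo builds supply chain analytics for retail.",
    "/press": "ExampleCo announces new analytics dashboard.",
}
terms = ["retail", "supply chain", "analytics"]

gaps = missing_associations(pages, terms)
```

Pages that appear in the report are candidates for editing so that the brand co-occurs with the same industries and use cases everywhere.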

These techniques align closely with modern AEO & GEO practices, where clarity and repeatability outperform creativity in AI comprehension.

Multi-model monitoring workflow

Cross-LLM consistency is not a one-time fix. It requires an ongoing monitoring workflow.

Enterprise teams should establish a quarterly or, in fast-moving sectors, a monthly review cycle. During each cycle, core brand questions are tested across models and compared against a defined “truth baseline.”

Outputs should be scored on accuracy, completeness and confidence. Any deviation is logged, not ignored.
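The scoring step might look like the following sketch. It assumes the truth baseline is expressed as phrases an answer should contain, phrases that signal outdated claims, and hedging phrases; the `Baseline` class, the scoring rules and the sample answer are illustrative assumptions, and phrase matching is a crude proxy for a human or model-assisted review.

```python
from dataclasses import dataclass
from typing import Dict, List

@dataclass
class Baseline:
    required: List[str]  # phrases a correct answer should contain
    outdated: List[str]  # phrases that signal stale or wrong claims
    hedges: List[str]    # phrases that signal low confidence

def score(answer: str, baseline: Baseline) -> Dict[str, object]:
    """Score one answer on completeness, accuracy and confidence."""
    text = answer.lower()
    found = [p for p in baseline.required if p.lower() in text]
    stale = [p for p in baseline.outdated if p.lower() in text]
    hedged = [p for p in baseline.hedges if p.lower() in text]
    return {
        # Share of required phrases present.
        "completeness": len(found) / len(baseline.required) if baseline.required else 1.0,
        # Crude rule: any outdated phrase makes the answer inaccurate.
        "accuracy": 0.0 if stale else 1.0,
        "confident": not hedged,
        # Everything to log: stale claims plus missing required phrases.
        "deviations": stale + [p for p in baseline.required if p not in found],
    }

baseline = Baseline(
    required=["enterprise", "analytics platform"],
    outdated=["consulting"],
    hedges=["appears to", "may be"],
)
result = score("ExampleCo appears to be an analytics platform with a consulting arm.", baseline)
```

Logging the `deviations` list for each model and cycle gives the audit trail the workflow calls for.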

This workflow often integrates with existing brand authority AI dashboards and content governance processes. The difference is scope: instead of monitoring search rankings or sentiment, teams monitor AI memory alignment.

Some organizations also map model responses to internal content updates, enabling them to see which changes propagate successfully and which stall. Over time, this creates a feedback loop that improves multi-model alignment predictability.

Correction steps

When inconsistencies are found, correction must be precise and measured.

First, update authoritative sources, not fringe mentions. Models learn from high-signal pages, not scattered corrections. Focus on core brand pages, structured knowledge hubs and trusted third-party references.

Second, eliminate ambiguity. Remove outdated language, overlapping positioning, or contradictory claims. Consistency is often restored by subtraction, not addition.

Third, reinforce corrected narratives through repetition across trusted contexts. Models learn through pattern density. The same truth expressed clearly in multiple high-authority locations strengthens recall.

Finally, allow time for propagation. LLM updates are asynchronous. Immediate correction across all models is unrealistic, but gradual convergence is measurable.
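To make gradual convergence measurable, one option is to track an agreement score across models for each audit cycle. The sketch below uses mean pairwise Jaccard similarity over word sets, which is an assumption chosen for simplicity; embedding-based similarity would be a reasonable alternative. The function names and sample answers are hypothetical.

```python
from itertools import combinations
from typing import Dict

def jaccard(a: str, b: str) -> float:
    """Word-set overlap between two answers, from 0.0 to 1.0."""
    tokens_a, tokens_b = set(a.lower().split()), set(b.lower().split())
    union = tokens_a | tokens_b
    return len(tokens_a & tokens_b) / len(union) if union else 1.0

def agreement(answers: Dict[str, str]) -> float:
    """Mean pairwise Jaccard similarity across all models' answers."""
    pairs = list(combinations(answers.values(), 2))
    if not pairs:
        return 1.0
    return sum(jaccard(a, b) for a, b in pairs) / len(pairs)

# Hypothetical answers to the same question in two consecutive audit cycles.
cycle_1 = {"model_a": "enterprise analytics platform",
           "model_b": "mid-market service provider"}
cycle_2 = {"model_a": "enterprise analytics platform",
           "model_b": "enterprise analytics provider"}

score_1 = agreement(cycle_1)
score_2 = agreement(cycle_2)
```

A score that rises from one cycle to the next suggests the corrected narrative is propagating; a flat score suggests the authoritative sources need further work.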

This correction cycle favors stable inputs over reactive adjustments, an emphasis broadly in keeping with OpenAI and Anthropic research on model consistency and alignment.

FAQs

How do I keep my brand consistent across AI models?

Maintain a single canonical brand narrative, reinforce it through structured, authoritative content and monitor how multiple LLMs describe your brand over time.

Why does ChatGPT say something different from Gemini about my company?

Each model uses different training data, update cycles and retrieval logic, which can lead to divergent interpretations if brand signals are inconsistent.

How often should I audit AI model outputs?

Enterprise teams should audit quarterly at a minimum and monthly if the brand undergoes frequent updates or operates in a fast-changing market.

Can cross-LLM inconsistency affect trust and conversions?

Yes. Conflicting AI-generated descriptions reduce credibility, create buyer hesitation and weaken perceived authority in AI-driven decision journeys.

Conclusion

Cross-LLM consistency management has become an essential technical function for enterprise brands operating in an AI-first discovery landscape.

When ChatGPT, Gemini and Claude disagree about who you are, the issue is not perception; it is signal integrity. By detecting inconsistencies early, engineering clarity at the source and maintaining a disciplined multi-model monitoring workflow, brands can ensure that AI systems reinforce rather than fragment their authority.

In the era of AI-mediated trust, consistency is not optional. It is infrastructure.